Who remembers when the cloud was going to save your company tons of money?

The concept was simple: instead of buying hardware and storage to support your applications, you would provision only the resources you needed at that time and scale up or out based on new demand or new application deployment. When demand subsided, those “extra” resources would be deprovisioned. The cloud was supposed to be the end of excess capital expenditures and a way to flatten boom-or-bust IT hiring and firing cycles.
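To make the elastic-provisioning idea concrete, here is a minimal sketch that compares a fleet sized for peak demand with one scaled to each hour's demand. It assumes a hypothetical workload in which one instance serves 100 requests per second at an invented price of $0.10 per instance-hour. Neither figure comes from a real cloud provider, and the function names are illustrative only.

```python
import math

# Hypothetical assumptions for illustration only (not real provider figures).
REQUESTS_PER_INSTANCE = 100      # requests/second one instance can serve
PRICE_PER_INSTANCE_HOUR = 0.10   # USD per instance-hour


def instances_needed(demand_rps: float) -> int:
    """Smallest instance count that covers the demand (never fewer than one)."""
    return max(1, math.ceil(demand_rps / REQUESTS_PER_INSTANCE))


def fixed_capacity_cost(hourly_demand: list) -> float:
    """Cost of provisioning for peak demand every hour, the traditional approach."""
    peak = instances_needed(max(hourly_demand))
    return peak * len(hourly_demand) * PRICE_PER_INSTANCE_HOUR


def elastic_cost(hourly_demand: list) -> float:
    """Cost when capacity is scaled up or down to match each hour's demand."""
    instance_hours = sum(instances_needed(d) for d in hourly_demand)
    return instance_hours * PRICE_PER_INSTANCE_HOUR


if __name__ == "__main__":
    demand = [50, 80, 400, 950, 300, 60]  # hypothetical requests/second per hour
    print(f"fixed:   ${fixed_capacity_cost(demand):.2f}")
    print(f"elastic: ${elastic_cost(demand):.2f}")
```

With this made-up demand curve, the peak-sized fleet pays for 60 instance-hours while the elastic fleet pays for 20. That gap is the saving the cloud promised, and it only materializes if resources are actually scaled back down when demand falls.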

The idea was sound in theory, but cost savings have eluded most cloud adopters. The 2022 KPMG U.S. Technology Survey found that 67% of respondents have not realized a substantial ROI from their cloud investments.

Macroeconomic factors are forcing everyone to examine how they use their financial resources, including how they spend on cloud computing. This pivot in how organizations invest in the cloud is even affecting hyperscalers, as seen in the slowdown in AWS’s revenue growth in Amazon’s latest quarterly results. In fact, one analyst who covers Amazon said in a brief on the company that "Slowing cloud demand remains a key concern as businesses shift focus from accelerating cloud migration to optimizing cloud costs."

There are myriad ways to reduce your cloud expenses, but one of the easiest is to improve stewardship of cloud environments. It’s still common for organizations to provision more resources than they need, and it’s not unheard of for companies to forget to shut down a cloud environment that is no longer required. This is wasteful and hurts your bottom line.

No matter how experienced a DevOps or SRE team member is, it is virtually impossible to continually assess the compute, storage, cluster, and database configurations required to deliver maximum performance at the lowest cost. Application Resource Management (ARM) is a type of software that uses AI to take the guesswork out of cloud stewardship.

In a blog post from last summer, we showed that ARM products such as IBM Turbonomic are integral to insulating applications and users from performance issues caused by having too few cloud resources to meet varying application demand.

Turbonomic not only shows you the performance of every component in your application supply chain, but also uses AI to act on resource placement or to start workflows that optimize cloud costs. It automatically examines a wide range of metrics, including vCPU, vMem, network and storage IO, throughput, reserved instance inventory, and cloud pricing. The platform then optimizes the placement of cloud resources so they are exactly where and when you need them, without your having to pay for excess capacity.
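Turbonomic's analysis is proprietary, so the following is not its algorithm. It is a minimal, hypothetical sketch of the rightsizing idea behind this kind of tool: choose the cheapest instance type whose vCPU and memory cover a workload's observed peak usage plus a safety margin. The instance catalog, prices, the 20% headroom, and the 730-hour month are all assumptions invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpu: int
    mem_gib: float
    hourly_usd: float


# Hypothetical catalog: names and prices are placeholders, not real offerings.
CATALOG = [
    InstanceType("small", 2, 4.0, 0.05),
    InstanceType("medium", 4, 8.0, 0.10),
    InstanceType("large", 8, 16.0, 0.20),
    InstanceType("xlarge", 16, 32.0, 0.40),
]


def rightsize(peak_vcpu: float, peak_mem_gib: float,
              headroom: float = 0.2) -> Optional[InstanceType]:
    """Return the cheapest type that fits peak usage plus headroom, or None."""
    need_cpu = peak_vcpu * (1 + headroom)
    need_mem = peak_mem_gib * (1 + headroom)
    fits = [t for t in CATALOG if t.vcpu >= need_cpu and t.mem_gib >= need_mem]
    return min(fits, key=lambda t: t.hourly_usd, default=None)


if __name__ == "__main__":
    current = CATALOG[-1]  # hypothetical workload currently running on "xlarge"
    best = rightsize(peak_vcpu=3, peak_mem_gib=5)
    if best is not None:
        monthly_saving = (current.hourly_usd - best.hourly_usd) * 730  # ~hours/month
        print(f"{current.name} -> {best.name}, saves about ${monthly_saving:.2f}/month")
```

This sketch considers only vCPU and memory. The other signals listed above, such as network and storage IO, throughput, and reserved instance inventory, would each add constraints or change the cost comparison, and weighing all of them continuously is exactly the work that is hard to do by hand.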

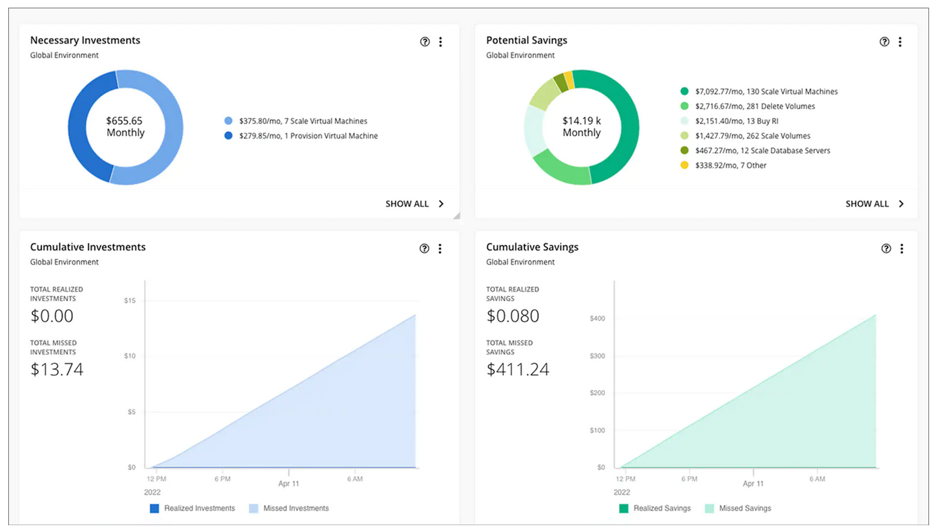

After accurately matching application demand to cloud infrastructure resources in real time, Turbonomic provides a set of dashboards showing the impact of dynamic resource allocation on both performance and cost.

ARM platforms eliminate overallocation of cloud resources and save you money while also improving the Mean Time To Resolution (MTTR) of application performance issues. In addition, by helping you reduce electricity demand, ARM platforms provide demonstrable evidence that you are reducing your carbon footprint and contributing to Green IT initiatives.

If you are interested in learning more about how LRS can help you reduce cloud costs while assuring application performance and automating IT operations, please contact us to request a meeting.

About the author

Steve Cavolick is a Senior Solution Architect with LRS IT Solutions. With over 20 years of experience in enterprise business analytics and information management, Steve is 100% focused on helping customers find value in their data to drive better business outcomes. Using technologies from best-of-breed vendors, he has created solutions for the retail, telco, manufacturing, distribution, financial services, gaming, and insurance industries.